Lagrange’s DeepProve: AI You Can Prove

May 27, 2025

Lagrange Labs launched DeepProve in March 2025. Read below for a closer look into how DeepProve works.

Trust in AI will only come through cryptographic verification.

Problem: AI Needs to be Trustworthy

As AI becomes increasingly embedded into our everyday lives, we are presented with one critical question: How can we trust the outputs of the AI models we rely on?

To trust AI, we must be able to verify the correctness of its answers and of the models it runs. Verification, however, has long been an obstacle to developing safe AI. Traditional verification methods often require access to sensitive model information, potentially exposing intellectual property or proprietary logic, while the techniques for protecting that information are often too technically demanding for the people who need them.

Efforts to build trust in AI have focused on localized, institution-driven assurances: users are expected to believe that the AI systems they engage with are giving them the correct answers and using the correct models because institutions say so. Ultimately, this model of trust relies on policy rather than proof and offers no guarantees.

What we need is a global, auditable, neutral, and technical foundation for proving the correctness of AI outputs—something that can shift AI trust from centralized assurances to cryptographic guarantees. The solution is Lagrange’s DeepProve.

Solution: Enable AI You Can Prove (Lagrange’s DeepProve)

DeepProve, a zero-knowledge machine learning (zkML) inference framework built by Lagrange, proves neural network inferences with cryptographic proofs. The system allows someone to prove that “the output Y came from running the model on input X” while keeping the model’s weights protected, bringing verifiability and privacy to AI models everywhere. Lagrange reports that DeepProve is up to 1000x faster at proof generation, up to 671x faster at proof verification, and up to 1150x faster at one-time setup than the baseline it was benchmarked against.

DeepProve uses ZK proofs to verify that an AI model produced a specific output from a specific input without revealing the model weights. AI outputs become verifiable while the model stays private, which suits applications built on proprietary models. The primary cryptographic techniques are:

- Sum-check Protocol: The sum-check protocol is a classic interactive proof technique that lets a verifier check a claimed sum of a multivariate polynomial over all Boolean inputs without evaluating every term itself. In DeepProve, this protocol is adapted to efficiently verify linear machine learning computations, such as matrix multiplications, by representing them as structured polynomial evaluations.

- Lookup Argument: Lookup arguments make proof generation in DeepProve more efficient by letting the prover show that each input/output pair of an operation appears in a precomputed table, instead of proving the operation arithmetically from scratch. This approach is especially effective for verifying non-linear operations common in machine learning, such as activation functions (e.g., ReLU, softmax) and normalization steps.
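To make the sum-check idea concrete, here is a minimal sketch in Python. It is not DeepProve's implementation: the field modulus, the function names (`fold`, `mle_eval`, `sumcheck`), and the choice to run prover and verifier in a single function are all assumptions made for illustration. The polynomial is multilinear and is given by its table of values on Boolean inputs, so each round message is a line described by two field elements. In a real system the final evaluation would come from a polynomial commitment opening rather than from the raw table.

```python
import random

P = 2**61 - 1  # toy prime modulus (assumption, chosen only for illustration)

def fold(table, r):
    """Fix the first variable to r: blend the x1=0 half with the x1=1 half."""
    half = len(table) // 2
    return [(lo + r * (hi - lo)) % P for lo, hi in zip(table[:half], table[half:])]

def mle_eval(table, point):
    """Evaluate the multilinear extension of `table` at `point`."""
    for r in point:
        table = fold(table, r)
    return table[0]

def sumcheck(table, claimed_sum, rng):
    """Run an honest prover against the verifier; return True iff accepted.

    `table` holds 2**n values of a multilinear polynomial on {0,1}^n.
    """
    original = list(table)
    claim = claimed_sum % P
    point = []
    while len(table) > 1:
        half = len(table) // 2
        # Prover: the round polynomial is linear, so send its values at 0 and 1.
        g0 = sum(table[:half]) % P
        g1 = sum(table[half:]) % P
        # Verifier: the two values must add up to the running claim.
        if (g0 + g1) % P != claim:
            return False
        r = rng.randrange(P)               # verifier's random challenge
        claim = (g0 + r * (g1 - g0)) % P   # new claim: round polynomial at r
        point.append(r)
        table = fold(table, r)
    # Verifier: one evaluation at the random point settles the final claim.
    return claim == mle_eval(original, point)

# Toy usage: the terms of a dot product between a weight row and an input.
weights = [3, 1, 4, 1]
inputs = [2, 7, 1, 8]
terms = [w * x for w, x in zip(weights, inputs)]

print(sumcheck(terms, sum(terms), random.Random(0)))      # True
print(sumcheck(terms, sum(terms) + 1, random.Random(0)))  # False
```

The verifier does work proportional to the number of variables plus one evaluation at a random point, rather than summing all 2^n terms itself. Production protocols run sum-check on products of polynomials (for example weights times inputs), which makes each round polynomial higher degree than the lines used here.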

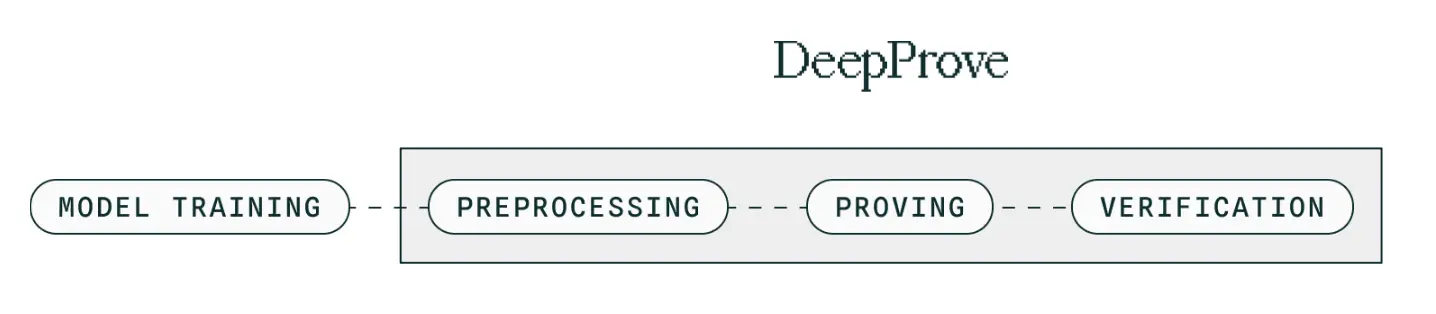

How DeepProve Works

- Preprocessing: First, a trained ML model is exported as an ONNX file, along with sample inputs. During preprocessing, the ONNX graph is parsed (prepared for efficient proof generation), the sample inputs are used to compute the quantized version of the model, and prover/verifier keys are generated. Preprocessing is a one-time step that does not need to be repeated.

- Proving: After preprocessing, DeepProve runs the model on a given input and records how each node of the neural network processes data (also known as an "execution trace"). For every node, DeepProve generates a cryptographic proof that the computation was done correctly. These individual proofs are then aggregated into a single, succinct proof that represents the entire model's correct execution.

- Verification: Once the succinct proof is generated, it is verified using the model’s input and output, the model’s commitment (which uniquely represents the model without revealing any of its internal details), and the verification keys. This process confirms that the inference was computed correctly without revealing the model’s weights.

In simpler terms, all developers need to do is: export their model to ONNX → complete a one-time setup → generate proofs → verify AI inferences anywhere.
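The preprocessing step mentions using sample inputs to compute a quantized model. Proof systems operate on integers in a finite field, so floating-point values have to be mapped to integers first. The sketch below shows one common way to do this, symmetric quantization with a scale calibrated from sample data. It is an assumption about the general technique, not a description of DeepProve's quantizer, and the function names are hypothetical.

```python
def calibrate_scale(samples, bits=8):
    """Choose a scale so the largest sample magnitude maps to the top integer level."""
    max_abs = max(abs(v) for row in samples for v in row)
    qmax = 2 ** (bits - 1) - 1
    return max_abs / qmax if max_abs > 0 else 1.0

def quantize(values, scale, bits=8):
    """Map floats to signed integers, clipping anything outside the calibrated range."""
    qmax = 2 ** (bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]

def dequantize(quantized, scale):
    """Map integers back to approximate floats."""
    return [q * scale for q in quantized]

# Hypothetical sample inputs gathered during preprocessing.
samples = [[0.5, -1.27], [1.0, 0.25]]
scale = calibrate_scale(samples)

q = quantize([1.27, -0.5, 0.0, 2.0], scale)
print(q)  # [127, -50, 0, 127]; 2.0 lies outside the calibrated range and is clipped
```

Representative sample inputs matter here: values outside the calibrated range are clipped, which costs accuracy.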

Scalability and Specialization: A Match Made in Heaven

Although proving AI outputs with ZK cryptography is critical to safe AI, it is a resource-intensive process. DeepProve ensures that verifiable AI is as resource-efficient as possible by leveraging the Lagrange Prover Network (LPN) to offload and distribute the heavy lifting of proving. The result is an AI verification process that is fast, scalable, affordable, and widely accessible.

At its core, the Lagrange Prover Network is made up of specialized nodes (provers) that generate ZK proofs on demand. These provers act like a decentralized cloud, while lightweight verifiers check the results. By distributing proof generation across many provers, LPN a) eliminates bottlenecks, b) decreases the cost per proof, c) guarantees decentralization, and d) enables provers to optimize for specific models or hardware. In practice, DeepProve can split an AI inference into multiple parts, prove each part independently on parallel machines in the LPN, and aggregate those parts into a single, compact proof to present to the user.

DeepProve further benefits from the LPN's ability to deploy specialized groups of provers, in that a dedicated, modular layer of provers can be designed to specifically support DeepProve’s zkML workloads. This allows provers to optimize for different models and hardware setups—like GPUs or ASICs—enabling faster, more efficient proof generation. By tailoring the network to the unique demands of verifiable AI, LPN ensures DeepProve remains scalable, performant, and adaptable as AI and ZK systems continue to evolve.

Clients and provers who utilize DeepProve also benefit from the LPN’s double-auction mechanism known as DARA (Double Auction Resource Allocation mechanism). Using a knapsack-based allocation algorithm, DARA selects the highest-value matches by ranking proof requesters by willingness to pay and provers by cost per compute cycle. The mechanism enforces truthful bidding through a threshold pricing model—clients pay just enough to win their bids, while provers receive payments tied to market-clearing prices (as opposed to arbitrary offers). This design ensures that both sides maximize utility: clients minimize costs without gaming the system and provers receive market-competitive prices. By aligning incentives and guaranteeing fair pricing, DARA creates an efficient, non-extractive marketplace for cryptographic proofs.
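The pricing idea can be illustrated with a much simpler mechanism than DARA itself. The sketch below is the classic trade-reduction double auction with one unit per participant, swapped in for illustration: it has no knapsack step and is not Lagrange's algorithm. It ranks clients by bid and provers by cost, finds the last profitable pair, excludes that pair, and uses its bid and ask as threshold prices so that no winner's payment depends on their own report. All names and numbers are hypothetical.

```python
def trade_reduction(bids, asks):
    """Unit-demand double auction with threshold prices.

    bids: {client: maximum price the client will pay per unit of work}
    asks: {prover: minimum price the prover will accept per unit of work}
    Returns (matches, price_paid_by_clients, price_received_by_provers).
    """
    buyers = sorted(bids.items(), key=lambda kv: -kv[1])   # highest bid first
    sellers = sorted(asks.items(), key=lambda kv: kv[1])   # lowest cost first

    # k = number of rank-aligned pairs where the bid still covers the ask.
    k = 0
    while k < min(len(buyers), len(sellers)) and buyers[k][1] >= sellers[k][1]:
        k += 1
    if k < 2:
        return [], None, None  # nothing trades once the marginal pair is dropped

    # The k-th pair sets the prices and is excluded from trading.
    buyer_price = buyers[k - 1][1]
    seller_price = sellers[k - 1][1]
    matches = [(buyers[i][0], sellers[i][0]) for i in range(k - 1)]
    return matches, buyer_price, seller_price

bids = {"client_a": 10, "client_b": 8, "client_c": 5, "client_d": 2}
asks = {"prover_p": 1, "prover_q": 4, "prover_r": 6, "prover_s": 9}

print(trade_reduction(bids, asks))  # ([('client_a', 'prover_p')], 8, 4)
```

Here client_a pays 8, which is below its bid of 10, and prover_p receives 4, which is above its cost of 1. Neither can improve its price by misreporting, since both prices are set by the excluded marginal pair.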

In short, LPN ensures that DeepProve can continue to deliver cryptographic guarantees at speed and scale as the demand for verifiable AI grows, far beyond what any single system could support.

The Role of the $LA Token

When clients request AI inference proofs, they pay fees proportional to the compute required, typically in $ETH, $USDC, or $LA. Regardless of the payment token, all provers are ultimately compensated in $LA. To further subsidize costs, the network emits a fixed 4% of the $LA supply each year, distributed to provers based on the volume of proofs they generate. This emission model allows clients to pay only a portion of the true cost, while provers are fully rewarded. Token holders can also stake or delegate $LA to specific provers, directing emissions towards provers who align with the overall goals of the Lagrange ecosystem.
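As a rough illustration of the emission arithmetic, the sketch below splits one year of emissions among provers in proportion to proof volume. The total supply figure, the prover names, and the purely pro rata rule are assumptions made for the example. The effect of staking and delegation on the split is not modeled.

```python
def split_emissions(total_supply, proof_volume, rate_bps=400):
    """Split annual emissions pro rata by proof volume.

    total_supply: token supply in whole units (hypothetical figure)
    proof_volume: {prover: number of proofs generated in the year}
    rate_bps: emission rate in basis points (400 bps = 4%)
    """
    annual = total_supply * rate_bps // 10_000
    total = sum(proof_volume.values())
    if total == 0:
        return {prover: 0 for prover in proof_volume}
    # Integer division keeps amounts in whole units; any remainder is left undistributed.
    return {prover: annual * volume // total for prover, volume in proof_volume.items()}

rewards = split_emissions(1_000_000_000, {"p1": 600, "p2": 300, "p3": 100})
print(rewards)  # {'p1': 24000000, 'p2': 12000000, 'p3': 4000000}
```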

Read more about the $LA token and its mechanics here.

Don’t Trust AI—Verify It

As AI continues to inform decisions that impact our everyday lives (our health, our finances, our safety), we must be able to prove the outputs it gives us. Imagine a world where hospitals can validate diagnostic results from AI models without leaking patient data; a world where credit decisions from banks are proven to be made fairly; a world where users can trust that deepfakes are detected, and that the chatbots they interact with every day are running the correct models. DeepProve enables what was previously only imagined: AI you can prove.

With the cryptographic guarantee of zero-knowledge proofs and the decentralized power of the Lagrange Prover Network, DeepProve scales where other systems stall, making verifiable AI not just possible, but practical.